I'm on the fence about this, because while I understand artists wanting to protect their work, image recognition is a pretty important part of AI development. Ultimately, an AI has to be able to recognize images, and a good way to test its understanding is to have it generate images, even if the final goal is not to replace artists.

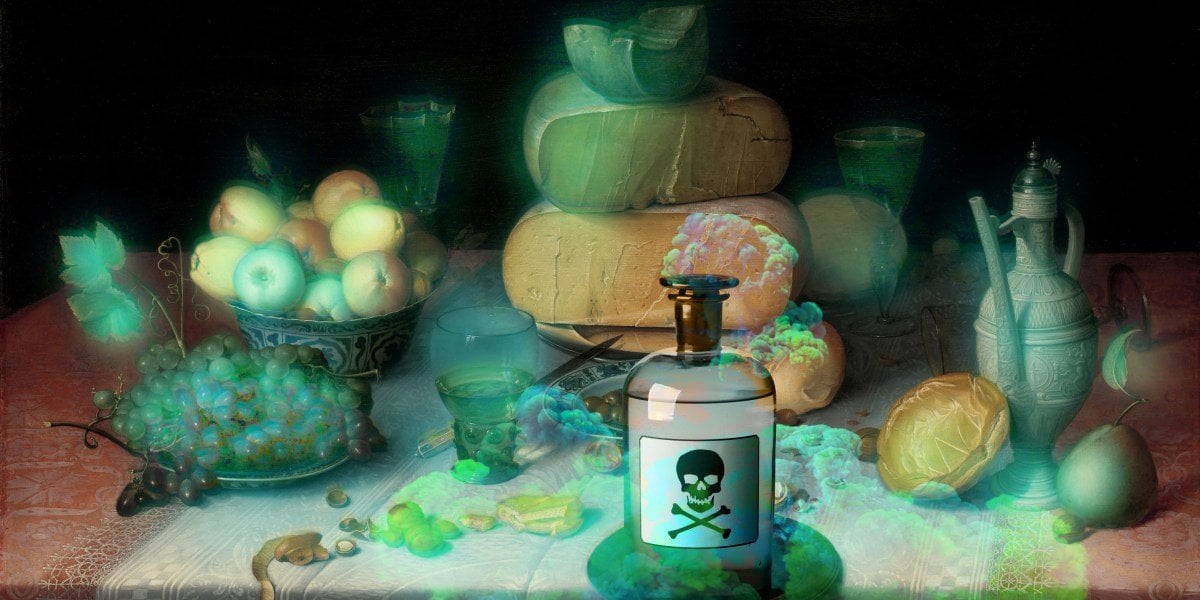

AI “art” is merely a fad, but the effect of AI in ending menial labor and dangerous tasks is very real, but only if these AIs are accurate. In my opinion, it was a huge blunder to give these AI image-generating tools to users. It was clearly a move to increase revenue, but it has also heavily hindered the future advancement of AI, both through things like data poisoning and by garnering a lot of hostility against AI.

Say you have an AI firefighter that, due to data poisoning, sprays a baby with CO2 thinking it is a fire, and rescues a microwave thinking it is a person.

Or an AI-operated truck that appears to work perfectly at launch, but eventually poisoned data makes its way into its training data, and it starts to think stop signs are “no stopping” signs.

Surely this tactic is great at keeping AI “artists” from stealing real artists' styles, but I wonder if there's a way to still make sure AI moves forward so it can do the jobs people actually don't want to do.